Background

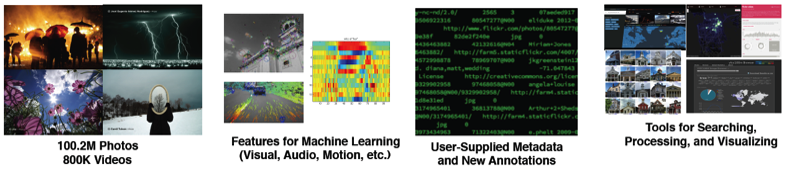

Everyday, thousands of videos, images, and websites appear on the Internet creating an ever-growing demand for methods to make them easier to retrieve, search, and index. YouTube alone claims that every minute, 100 hours worth of video material is uploaded. Most of these videos consist of consumer-produced, “unconstrained” videos from social media networks, such as YouTube uploads or Flickr content. Since many of these videos are reflecting people's everyday life experience, they constitute a corpus of never before seen scale for empirical research.

But:

- What are the methods can cope with this amount of data?

- How does one approach the problems in a University research setting, without thousands of compute cores at one's disposal?

- How does one budget and estimate the time needed to perform such experiments?

- What rigor has to be employed to make experiments robust in evaluations?

- What about repeatability and reproducibility?

Here is a quick hint: The answer is not massive computational power (as companies like Google or Amazon want you to believe).

It turns out, there are many myth in Machine Learning that this class will dispell. For example, speech recognition does not need the cloud and many machine learnings tasks do not need a GPU.

Content

This class will provide a theoretical and practical perspective on the experimental design for machine learning experiments on multimedia data.

The class consists of lectures and a hands-on component. About 50% of the lectures provide a theoretical introduction to machine learning design as an engineering discipline. The remaining 50% provide an introduction to practical signal processing for various media types, including the visual content, acoustic content, metadata, etc. Moreover, the lectures will discuss contemporary work in the field of multimedia content analysis. Guest speakers will enrich the class with their experiences. Homework is optional but useful.

For the hands-on component, student can chose a project and work on it. For example, experiments can be performed using the Multimedia Commons infrastructure on the YFCC100M corpus.

The Multimedia Commons. See http://mmcommons.org.

Lectures

1) Motivation: (Lecture Slides, for recording see Piazza). Additional Videos: Generalization, Science.

2) Scientific Process and Information, Shannon Number, Memory Equivalent Capacity: Lecture Slides.

3) Capacity of the Perceptron: Lecture Slides, Homework 1. Additional Video: No Universal Lossless Compression. Reading Recommendation: MacKay: Chapter 40, Rojas: Chapter 3.

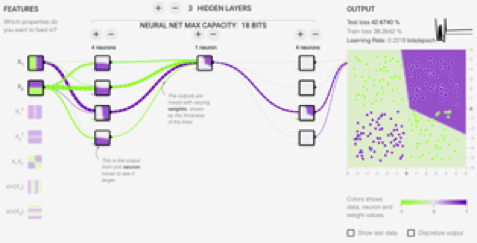

4) Capacity of Neural Networks. Lecture Slides, Demo: Tensorflow Meter

5) Estimating Expected Capacity given Data. Homework 2, Slides, Demo: Capacity estimation tools.

6) Fundamentals of Machine Learning: Generalization and Adversarial Examples. Homework 3.

7) The Relationship between Image Classification and Compression. Lecture Slides.

8) Demo: More on TFMeter, Brainome.ai, open source measurement tools. Homework 4.

9) Data Engineering for Audio I

9) Data Engineering for Audio II

10) Q&A Session

11) Multimedia Retrieval using Audio: Research Talk.

12) Data Engineering for Vision

13) Data Engineering for Vision II

14) Summary and What's Next?

Grading

To pass, students have to attend regularily and write a report related to a project as outlined below.

Master of Engineering students are required to take the final exam, everybody else is encouraged to take the final exam optionally. Weekly homework will be given which is optional but highly recommended, especially for non-graduate students.

The project counts 100% of the grade. If a final is taken, the project counts 80% of the grade and the final counts 20% of the grade. If the final was taken voluntarily, the final only improves the grade or does not count.

The final is open book. I definitely recommend bringing the Machine Learning Experimental Design Cheat Sheet.

Update: In 2021, there will be no final due to COVID-induced room constraints.

Project Requirements

Teams of 1 to 3 students enrolled in the class chose a project to either produce or reproduce a scientific result that entails machine learning. They write a paper that judges the experimental design and limits of the machine learning approach. The detailed project description is here.

The project should comment on all measurements taken and scientific methods applied. The conceptual tools for this are presented in class. The report must be in written form and can be accompanied by slides, code and videos.

Further inspiration can be found in the ACM reproducibility guidelines and the ACM SIG Multimedia reproducibility guidelines.

In this class, we do not care about accuracy as much as we care about reproducibility and scientific judgement of the experimental design. A project will not fail due to low accuracy.

Important: Group projects must describe each indvidual student's contributions in a paragraph.

Optional: Submit a paper to ACM Multimedia, ACM ICMR, IEEE MIPR, IEEE ICASSP, or another conference about your excellent results.

Enrollment

EE, CS, and data science MS and PhD students can directly enroll. Undergraduates should contact me for enrollment details. Priority will be given to URAP students of the multimedia group.

Pre-Requisites

The class requires solid programming skills, assumes familiarity with fundamental statistical concepts like the central limit theorem, probability distributions, and information measures. Familiarity with basic signal processing and computer architecture skills are helpful. Furthermore, a team-working attitude and open-mindedness towards interdisciplinary approaches is essential.

Materials

The Machine Learning Experimental Design Cheat Sheet helps with the ML engineering fundamentals of the class. The Machine Learning Experimental Process (to be revised) is an overview of the suggested experimental process and the 10 questions sheet (to be revised).

David MacKay's fantastic book (especially Chapter 40 and dependents) can be consulted for depth.

In general, supportive materials used for this class consists of contemporary research articles from conferences and journals. Details will be presented in class. I humbly recommend my textbook from Cambridge University Press. An overview of research on a large scale video analysis task is given in the Springer book. Also check out our demo on (deep) neural network capacity.

Lectures from the 2012 version of the class (before deep learning) can be accessed here.

Experimental Design for Machine Learning

on Multimedia Data

Fall 2021

CS294-082 Lecture (CCN 33046) -- Room 306 Soda -- Fridays 1-2:30pm

Note: This class will be held in person and is heavy on whiteboard content and discussion.

The 2020 lectures are online as fall back but are compressed compared to the 2021 version.